Agentic Digital

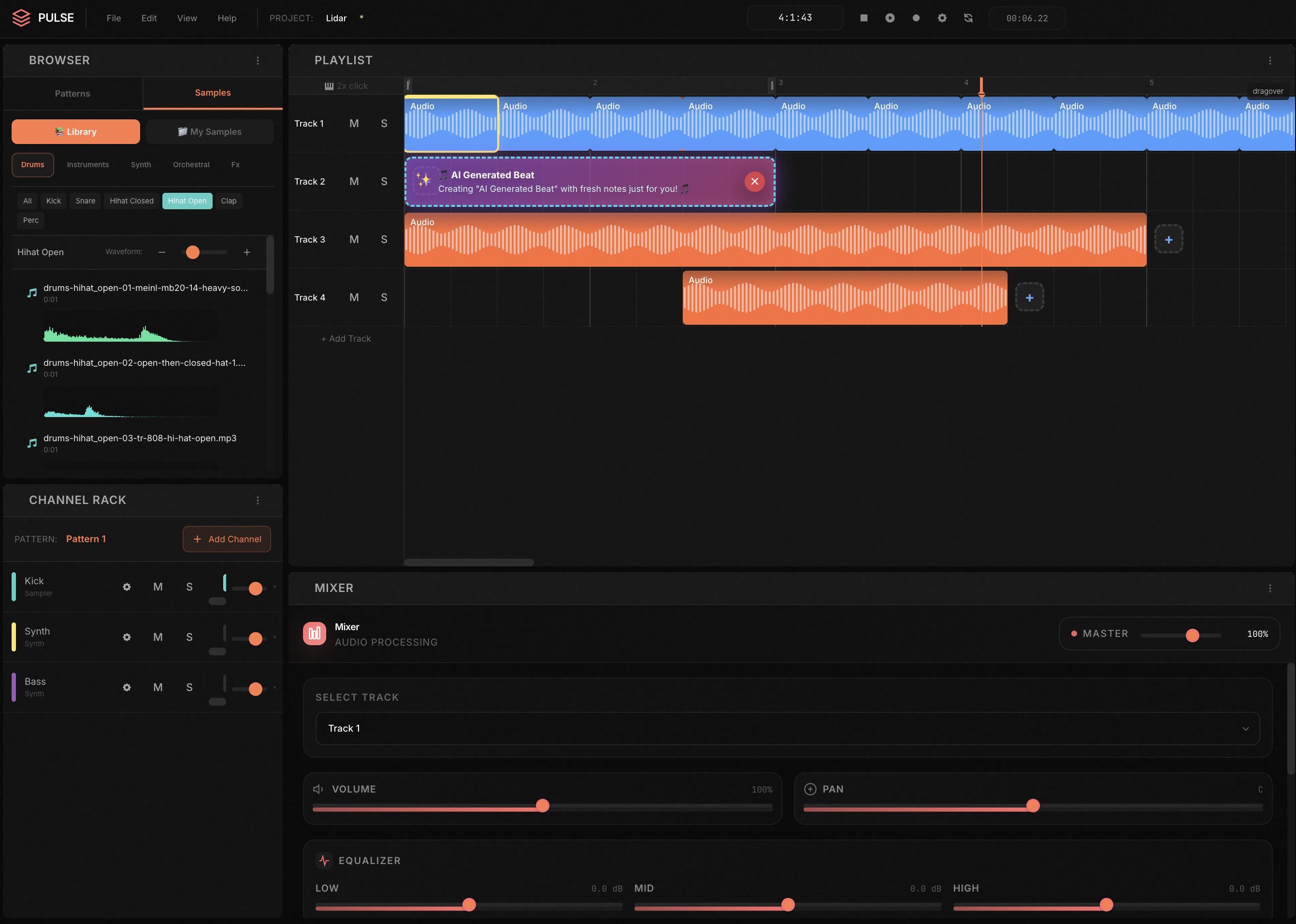

Audio Workstation

Designed for autonomous music systems and structured creativity.

An always-on AI music agent

An AI music agent that can listen, analyze, and refine your track autonomously.

Scale creativity without scaling effort

Autonomously analyzes musical context, resolves common mix and arrangement issues, and handles complex decisions in minutes.

Create with intent and control

Approvals, diffs, and a full change history keep every musical decision reversible and aligned with your creative vision.

Evolving musical agents

Continuously learns your taste, references, and workflow across projects to make better musical decisions over time.

Perfect handoffs for uninterrupted flow

Delivers full musical context with proposed changes and rationale so you never lose momentum or creative intent.

Autonomous validation on every change

Continuous, music-aware simulations run on each edit to verify groove, balance, and structure before you render.

Auto-generate musical tests

Creates validation scenarios from your latest musical changes.

Catch regressions early

Verifies groove, balance, and dynamics on every edit.

Render with confidence

Clear pass/fail signals before export.

Native session integration

Results appear directly in your timeline.

Pulse builds a memory of every creative decision, so your music evolves instead of repeating itself.

Private and creator-first

Built for artists and studios who demand control, privacy, and trust by default.

Human-in-the-loop control

Set approval rules so agents only act when and how you want them to.

Professional-grade reliability

Designed for real sessions, real deadlines, and production-grade workflows.

Private by design

Your music never trains public models. You own every output, always.

Local, cloud, or hybrid

Run agents locally, in the cloud, or both, fully under your control.

Introducing Sim-1

Our smartest models, capable of simulating how code runs

A new category of models built to understand and predict how large codebases behave in complex, real-world scenarios.

Research

Over 80% reduction in the average time to resolution by running tickets through PlayerZero

Cayuse achieves significant efficiency gains by automating ticket triage and resolution workflows.

Case Study

What is Predictive Software Quality? Software Operations in the AI Era

A new, AI-powered approach to operating software reliably that anticipates how code will behave before deployment.

Resources